Practical A/B Test Prioritisation Methods

Before you run an A/B test, you should prioritize your hypotheses. If you don't, you might start with a less effective experiment.

PIE framework

One framework for prioritizing testing opportunities is the PIE framework by WiderFunnel. To decide which pages to test and in what order, consider three criteria:

- Potential

- Importance

- Ease

Potential

How much further can the pages be improved? Although I've yet to come across a page that doesn't have room for improvement, you can't test everything simultaneously, so focus on your poorest performers. To find them, draw on your web analytics data, customer data, and expert heuristic analysis of user scenarios.

Importance

How valuable is the traffic to the pages? The pages that receive the most traffic, and the traffic you pay the most to acquire, are the ones that are most important to you. You may have identified pages that perform terribly, but they aren't testing priorities if they don't have a significant volume of costly traffic.

Ease

How difficult would it be to implement the test on the page or template? The final consideration is how hard it will be to get an experiment running on a page, which covers both technical implementation and organizational or "political" barriers. The less time and money you have to spend to get the same result, the better. A page that appears to be technically simple may have many stakeholders or vested interests, which might create roadblocks.

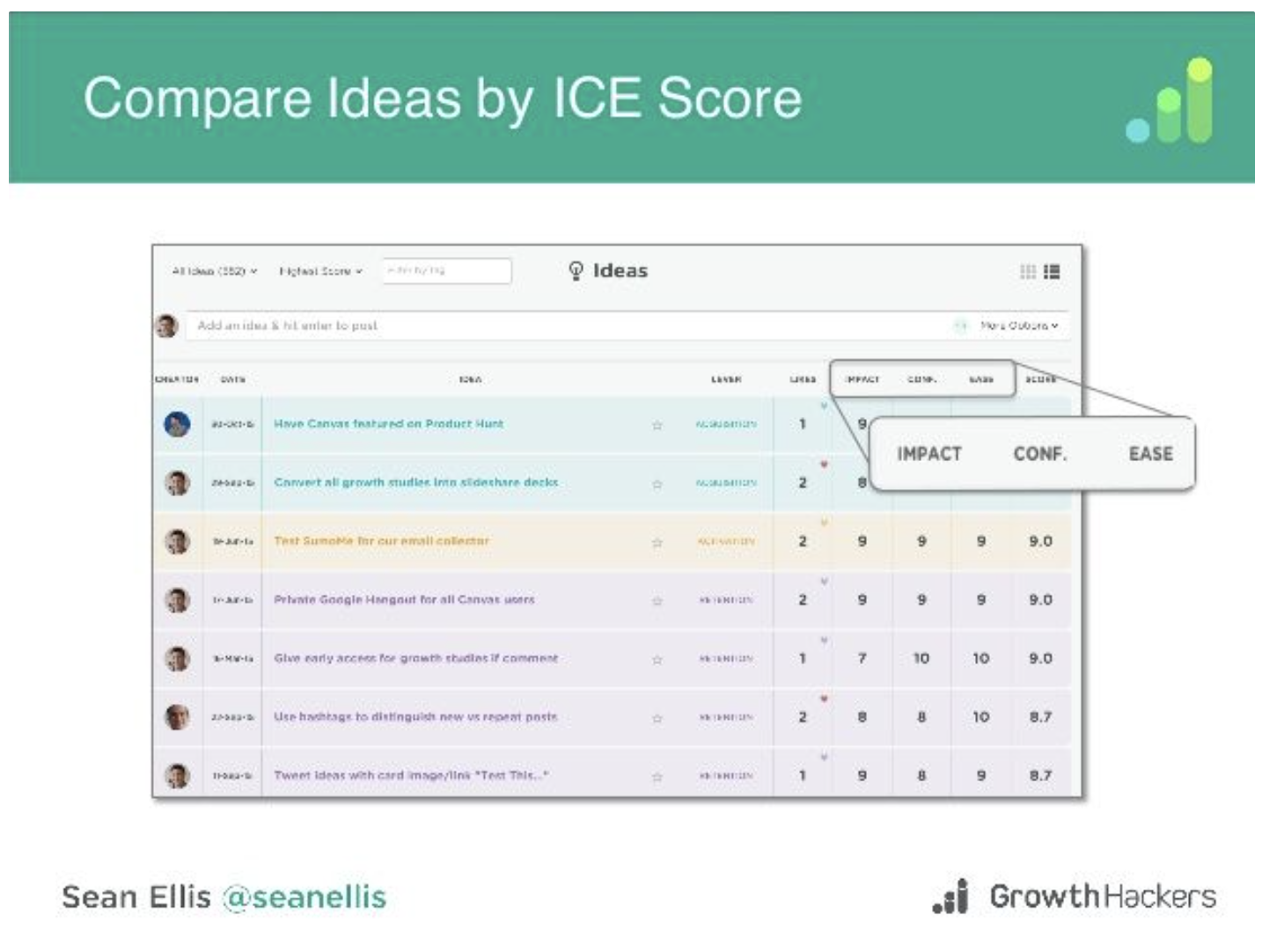
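As a rough illustration, the three PIE criteria can be turned into a ranking. This is a minimal sketch under assumptions not stated above: each criterion is rated from 1 to 10 (higher Ease means easier), the three ratings are combined with a plain average, and the `PageCandidate` class, page names, and ratings are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class PageCandidate:
    """A page being considered for testing (hypothetical structure)."""
    name: str
    potential: int   # 1-10: how much room for improvement the page has
    importance: int  # 1-10: how valuable the page's traffic is
    ease: int        # 1-10: how easy the test is to run (10 = easiest)

    def pie_score(self) -> float:
        # Assumption: the three ratings are combined with a simple average.
        return (self.potential + self.importance + self.ease) / 3


def rank_pages(pages):
    """Return pages sorted from highest to lowest PIE score."""
    return sorted(pages, key=lambda p: p.pie_score(), reverse=True)


# Made-up ratings, for illustration only.
pages = [
    PageCandidate("Checkout", potential=8, importance=9, ease=4),
    PageCandidate("Homepage", potential=6, importance=10, ease=7),
    PageCandidate("About us", potential=9, importance=2, ease=9),
]

for page in rank_pages(pages):
    print(f"{page.name}: {page.pie_score():.1f}")
```

Note how the "About us" page ranks last despite its high Potential and Ease: its low Importance drags the score down, which matches the point that poorly performing pages are not priorities unless their traffic is valuable.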

ICE Model by GrowthHackers

The ICE model is pretty similar to PIE. You start with a list of test ideas and rate each one for Impact, Confidence, and Ease on a scale of one to ten.

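The priority score is then calculated from the three ratings, most commonly as their average (add the three scores and divide by three). Some teams multiply the three ratings instead.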

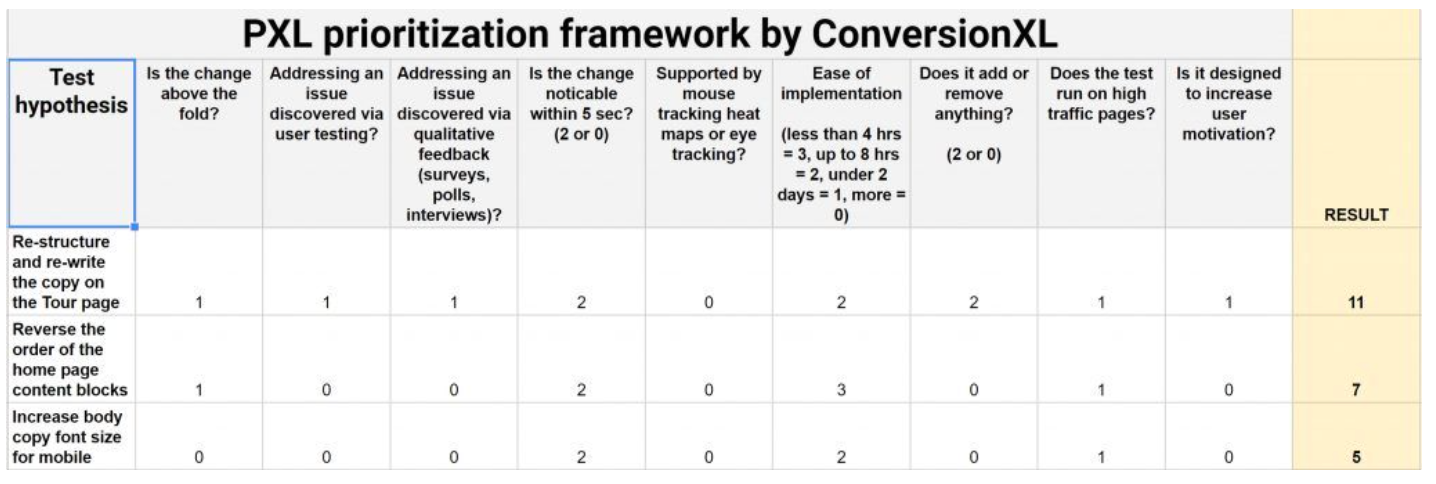
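The ICE calculation is simple enough to sketch in a few lines. The example below assumes the common convention of averaging the three 1 to 10 ratings, and also shows the multiplicative variant that some teams use. The function names, idea names, and ratings are hypothetical.

```python
def _check_ratings(*ratings):
    """Reject ratings outside the one-to-ten scale."""
    for rating in ratings:
        if not 1 <= rating <= 10:
            raise ValueError("ratings must be between 1 and 10")


def ice_average(impact, confidence, ease):
    """ICE score as the mean of the three ratings (assumed convention)."""
    _check_ratings(impact, confidence, ease)
    return (impact + confidence + ease) / 3


def ice_product(impact, confidence, ease):
    """Alternative ICE score: the product of the three ratings."""
    _check_ratings(impact, confidence, ease)
    return impact * confidence * ease


# Made-up test ideas with (impact, confidence, ease) ratings.
ideas = {
    "Shorter signup form": (7, 6, 8),
    "New pricing page layout": (9, 4, 3),
    "Rewrite CTA copy": (4, 7, 9),
}

# Rank ideas from highest to lowest average score.
ranked = sorted(ideas, key=lambda name: ice_average(*ideas[name]), reverse=True)

for name in ranked:
    print(f"{name}: {ice_average(*ideas[name]):.1f}")
```

Averaging keeps the score on the same one-to-ten scale as the inputs, while multiplying penalizes an idea more heavily for a single low rating. Either way, the ratings themselves remain subjective.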

PXL model by CXL

This model removes the need to rate ideas with subjective impact, potential, or confidence scores. Unless you have a crystal ball, assigning a potential score to an idea is largely guesswork.

Instead, this technique asks a set of objective yes/no questions about each idea and assigns a score depending on the answers. The questions cover the amount of data available to support the idea, the visibility and nature of the change, and the ease of implementation.
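The yes/no approach can be sketched as a weighted checklist. The questions and weights below are hypothetical stand-ins chosen to mirror the three themes mentioned above (supporting data, visibility of the change, ease of implementation). They are not the official PXL question set.

```python
# Hypothetical questions and the points awarded for a "yes" answer.
QUESTIONS = {
    "supported_by_analytics_data": 1,
    "supported_by_user_feedback": 1,
    "change_is_above_the_fold": 1,
    "change_is_noticeable_quickly": 2,
    "easy_to_implement": 2,
}


def pxl_score(answers):
    """Sum the weights of every question answered "yes".

    `answers` maps question keys to True/False. Questions that are
    left out count as "no". Unknown keys raise an error so that typos
    do not silently lower the score.
    """
    unknown = set(answers) - set(QUESTIONS)
    if unknown:
        raise KeyError(f"unknown questions: {sorted(unknown)}")
    return sum(
        weight
        for question, weight in QUESTIONS.items()
        if answers.get(question, False)
    )


# Made-up answers for a single test idea.
idea_answers = {
    "supported_by_analytics_data": True,
    "change_is_noticeable_quickly": True,
    "easy_to_implement": False,
}

print(pxl_score(idea_answers))
```

Because every input is a yes/no fact about the idea rather than a gut-feel rating, two people scoring the same idea should arrive at the same number.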