How to improve the performance of data models in Power BI?

With the performance analysis, we learned how to analyze potential performance issues when your report is presented to the end-user. So what actions can we take to resolve these problems? I'll talk about that in this essay.

If you haven't read my article about how performance analysis is done, I recommend you go to this page and read it.

Solve Problems and Improve Performance

Your performance analysis identifies opportunities for performance improvement and areas for improvement. You might discover that you need to improve the images, the DAX query, or other elements in your data model.

You can make modifications by using the following information to understand better what to look for.

Visualizations

If you determine that the images have a performance issue causing poor performance, you must find a way to improve performance while minimizing the impact on user experience.

A report page's performance improves when there are fewer visuals present. Ask yourself whether an image is necessary and whether it adds value to the end-user.

If the response is no, you ought to take this picture down. Instead of utilizing many images on the page, think about using drill-through pages and report page tooltips to convey more information.

Examine the number of fields in each image. The more images you have in the report, the more likely a performance issue will occur. Furthermore, the greater the number of images, the more likely the report will appear crowded and lose clarity.

The upper limit for images is 100 fields (measures or columns), so images with more than 100 fields will load slowly. Think about whether you need all of that information in a single image. You could find that using fewer domains will improve your situation.

DAX Query

You can see how long it takes the Power BI Desktop engine to process each query (in milliseconds) when you check the results in the performance analysis pane. A DAX query that takes more than 120 milliseconds to execute is the best place to begin. In this example, you specify a single query with a long duration.

Performance analysis pinpoints potential problems but doesn't offer suggestions for fixing them. It would help if you investigated why this metric takes so long to process. You can use DAX Studio to dig deeper into your queries.

For example, by selecting Copy Query, you can copy the calculation formula to the clipboard and then paste it into Dax Studio. Then you can go over the calculation step in greater detail.

Data Model

If metrics and images show low duration values, this is not the cause of performance issues. Instead, if the DAX query returns a high duration value, it is likely that a measure was mistyped or that there was a problem with the data model.

Your model's relationships, columns, or metadata may cause this issue, or the Automatic date/time option, as discussed in the section below, may also be to blame.

Relationships

To ensure that you establish the appropriate associations, you should evaluate the relationships between your tables. Make sure the relationship cardinality properties are configured correctly.

For example, a one-sided column with unique values might be misconfigured as a multi-sided column.

Columns

It's a good idea to avoid importing data columns that you won't use. It would help if you tried to deal with columns at source when putting data into Power BI Desktop to avoid eliminating them in the Power Query Editor.

When an unnecessary column is removed, the data model shrinks, resulting in smaller file size and a faster refresh time. Furthermore, overall report performance is enhanced because the dataset only contains pertinent data.

Metadata

Information regarding other data is known as metadata. Power BI metadata includes each column's name, data type, and format, database schema, report design, file last modified, data refresh rates, and so on. Includes details about your data model, like its.

When analyzing your metadata, look for unnecessary columns, data errors, incorrect data types, the volume of uploaded data, and so on. Analyzing your metadata will improve the performance of your data model.

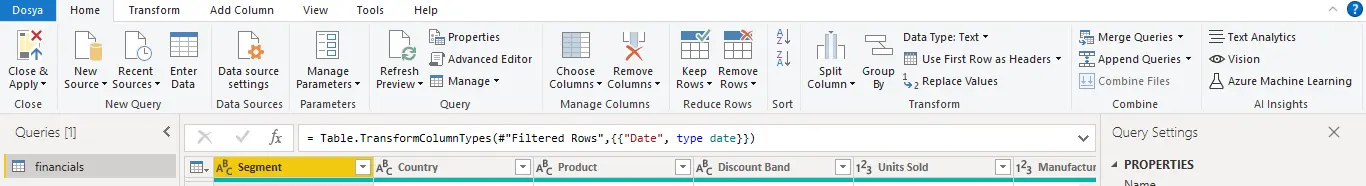

The Power Query Editor in Power BI Desktop can be used to examine columns, rows, and raw data values. You can make the necessary changes using the available tools, such as those highlighted in the screenshots below.

The Power Query options are as follows:

- Redundant columns - Determines the need for each column. Use the Remove Columns option on the Home tab to eliminate any columns that won't be needed for the report and are therefore redundant.

- Redundant rows - Check the top few rows of the dataset to determine whether they may be eliminated using the Remove Parent Rows option on the Home tab, whether they are empty or contain data you don't need for your reports.

- Data type - Checks each column data type to ensure it is correct. Change a wrong data type by navigating to the Convert tab, choosing the Data Type option, and then choosing the appropriate data type from the list if you discover one.

- Query names - Examine the tables' names (queries) in the Queries pane. Similar to column header names, query names that are unusual or unhelpful should be changed for ones that are more precise or recognizable to the user. After making necessary changes to the name by right-clicking on a query and selecting Rename, you can rename that query by pressing the Enter key.

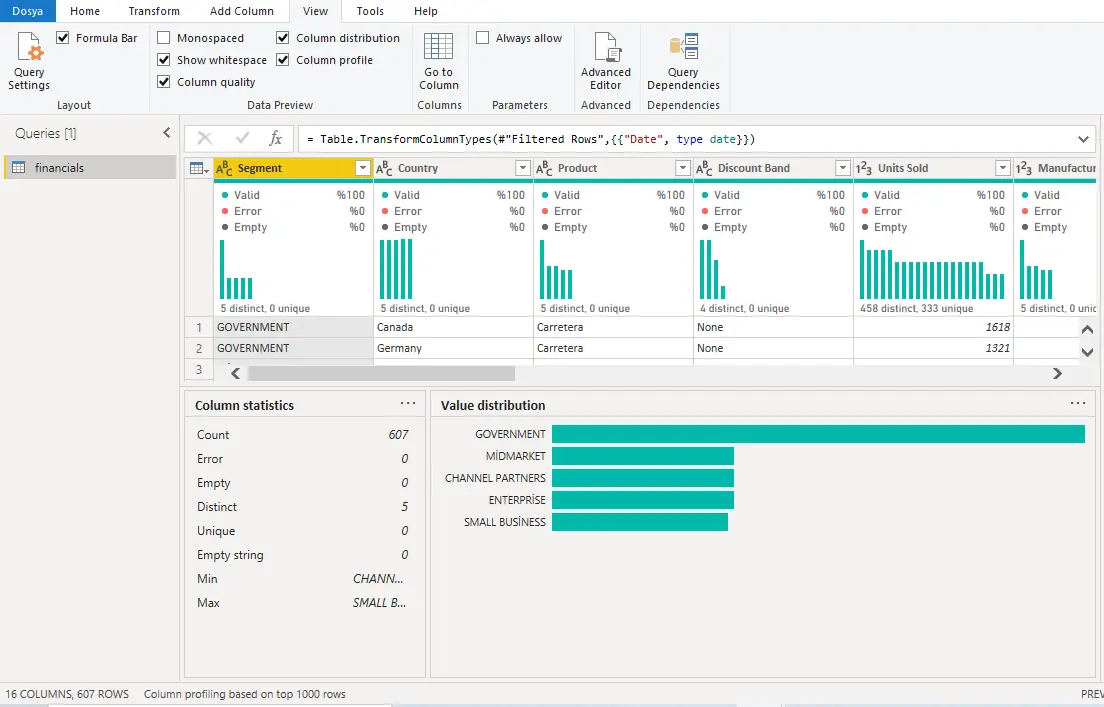

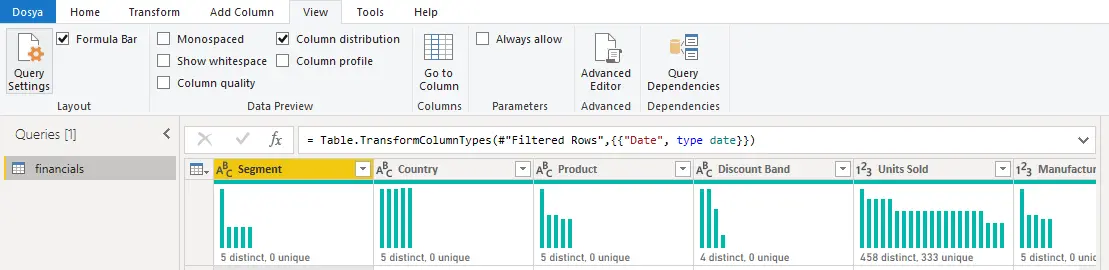

- Column details - The Power Query Editor provides three data preview options for analyzing the metadata associated with your columns. These choices can be found under the Appearance tab, as shown in the screenshot below.

- Column quality - The proportion of items in a column that are correct, have errors or are empty. If the percentage of valid ones is less than 100, you should look into the problem, correct any mistakes, and fill in the blanks.

- Query names column distribution -Establishes how many of your things are unique and how many are distinct. When establishing a column's cardinality, this information is helpful.

- Column profile - Displays additional statistics for the column and a graph depicting the distribution of unique items.

Other metadata to consider include data model-wide information such as file size and data refresh rates. This metadata is contained in the Power BI Desktop (.pbix) file. The VertiPaq storage engine compresses and saves the data you upload to Power BI Desktop. A smaller data model uses less memory and speeds up image rendering, calculations, and data refresh in reports.

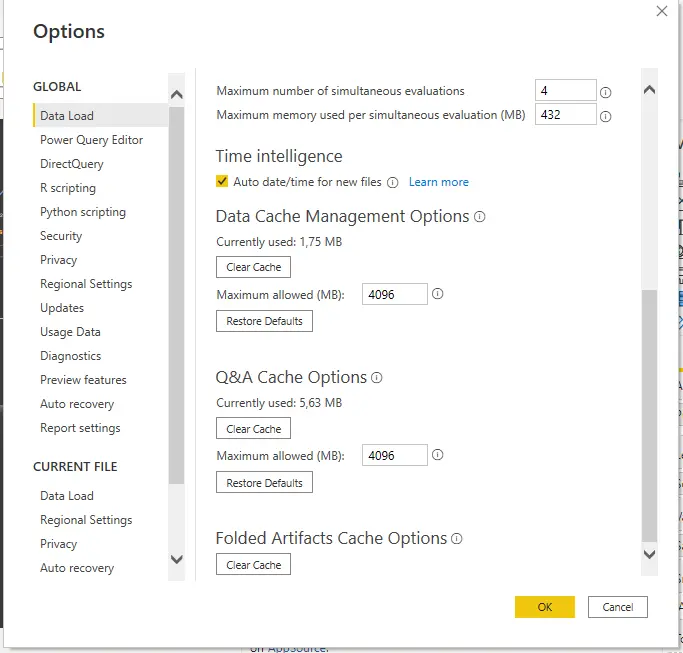

Automatic Date/Time Feature

Another thing to consider when trying to improve performance is the Automatic date/time option in Power BI Desktop. This feature is enabled globally by default.

Disable the global Automatic date/time option and use this table to specify time consistently across your company if your data source already has a date dimension table. Disabling this option may result in a smaller data model and a quicker refresh time.

To enable or disable the Automatic date/time option, navigate to File> Options and settings>>Options and select the General or Current File page. On one of these pages, select Data Loading, and then select or clear the checkbox in the Intelligent Time Display section as needed.

Using Variables to Improve Performance and Troubleshooting

Using variables in DAX formulas can make your computations simpler and more effective.

The advantages of using variables in your data model are as follows:

- Improved performance - Because Power BI reduces the need to evaluate the same expression repeatedly, variables can improve the efficiency of measures. You can get the same query results almost half the time.

- Improved readability - Variables have short self-explanatory names instead of ambiguous multi-word expressions. When variables are used, formulas may become easier to read and understand.

- Easier debugging - Variables may be used to debug formulas and test expressions, which may be useful during troubleshooting.

- Less complexity - Variables do not necessitate using the difficult-to-understand DAX functions EARLIER or EARLIEST. Before the invention of variables, these functions were necessary, and their complex expressions introduced new filter contexts. You can write less complex formulas now that you can use variables instead of these functions.

A Sample

The table below displays the measure definition in two ways to demonstrate how you can use it to improve the efficiency of a measure. The expression calculating "same period last year" is repeated in the formula, but in two different ways: the first uses the standard DAX calculation method, and the second uses variables.

The advanced measure definition is shown in the table's second row. The VAR keyword is used in this definition to present a variable named SalesPriorYear. An expression assigns the result "same period last year" to this new variable. The variable is then used twice in the RETURN statement.

- Without variable

Sales YoY Growth = DIVIDE ( ( [Sales] - CALCULATE ( [Sales], PARALLELPERIOD ( 'Date'[Date], -12, MONTH ) ) ), CALCULATE ( [Sales], PARALLELPERIOD ( 'Date'[Date], -12, MONTH ) ) )

- With variable

Sales YoY Growth = VAR SalesPriorYear = CALCULATE ( [Sales], PARALLELPERIOD ( 'Date'[Date], -12, MONTH ) ) VAR SalesVariance = DIVIDE ( ( [Sales] - SalesPriorYear ), SalesPriorYear ) RETURN SalesVariance

As a result of Power BI having to analyze the same expression twice, the formula in the table's initial measure definition is inefficient. The second definition is more effective due to the variable because it only needs to once evaluate the Power BI PARALLELPERIOD expression.

Using variables can reduce query processing time in half and enhance the efficiency of your data model if it comprises several queries with multiple metrics.

Using variables to troubleshoot multiple steps

Variables can assist you in debugging a formula and determining the source of the problem. Variables make debugging your DAX calculation easier by evaluating each one separately and invoking them after the RETURN statement.

In the following example, is the test an expression assigned to a variable? To debug, temporarily rewrite the RETURN statement to write to the variable. Because the SalesPriorYear variable comes after the RETURN statement, the measure definition only returns it.

Sales YoY Growth % = VAR SalesPriorYear = CALCULATE([Sales], PARALLELPERIOD('Date'[Date], -12, MONTH)) VAR SalesPriorYear% = DIVIDE(([Sales] - SalesPriorYear), SalesPriorYear) RETURN SalesPriorYear%

The SalesPriorYear value is the only one displayed by the RETURN statement. After debugging, you can use this technique to return the expression. It also simplifies calculations due to the reduced complexity of DAX code.

Cardinality Reduction

Cardinality refers to the distinctness of values in a column. When referring to relationships between two tables, "cardinality" is also used to define the relationship's direction.

Determine column cardinality levels

In the past, statistics on the number of distinct and individual items detected in each column of the data were shown when using the Power Query Editor to analyze metadata.

- Number of distinct values - The total number of distinct values in a given column.

- Number of unique values - The total number of values displayed only once in a given column.

A column with a lot of duplicate values has a low cardinality level. A column with many unique values, on the other hand, will have a high cardinality level.

Lower cardinality improves performance, so you may need to reduce the number of high-cardinality columns in your dataset.

Improving performance by reducing cardinality levels

Power BI Desktop offers some techniques, such as summarization, to reduce the amount of data loaded into data models. Reducing the amount of data loaded into your model improves the report's relationship cardinality. This is especially important for large models or models whose size is expected to grow over time.

The most effective method for reducing model size is to use a summary table in the data source. While the detail table can contain every transaction, the summary table only contains a single record daily, weekly, or monthly. For example, this could be an average of all transactions per day.

Customize Query Reduction Options

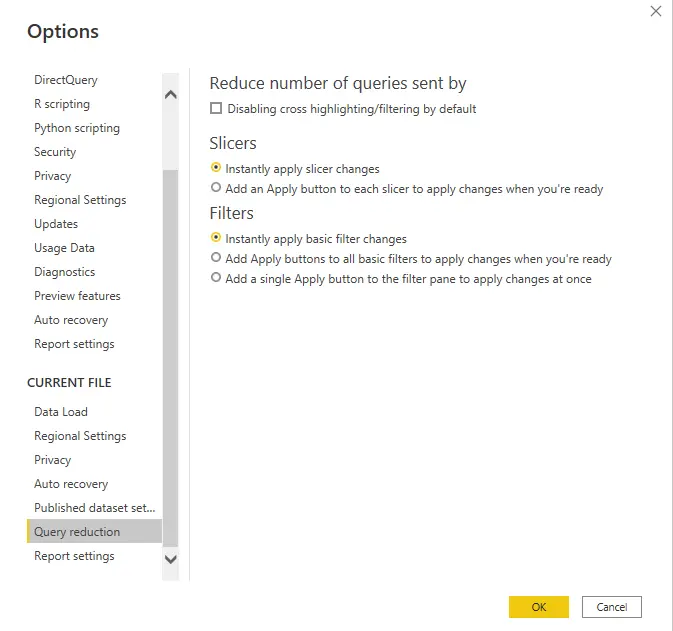

You can send fewer queries and stop some interactions in Power BI Desktop if you want to avoid giving users a bad experience if your queries take a long time to execute. Implementing these options improves performance by preventing queries from repeatedly accessing the data source.

You must alter the default values to apply the data reduction options to your model in this example. You may access the options by choosing File > Options and then scrolling to the bottom of the page.

Create and Manage Aggregations

As you collect data, you summarize it and present it at a higher level. For instance, you might compile all sales information and organize it by date, client, product, etc. Aggregation allows you to concentrate on key data and enhance query performance by reducing table sizes in the data model.

Your business may utilize aggregations in data models for the following purposes:

- When dealing with large amounts of data (big data), aggregations improve query performance and assist you in analyzing and deriving insights from that big data. Because collected data is cached, a resource partition required for detailed data is used.

- Aggregations can help you speed up the refresh process if you're experiencing a slow refresh. A smaller cache size reduces refresh time, allowing data to reach users more quickly. You refresh a smaller amount of data as opposed to millions of rows.

- Aggregations can assist you in reducing and maintaining the size of your data model if you have a large one.

- Suppose you expect your data model to grow in size in the future. In that case, you can use aggregations to future-proof it by minimizing the likelihood of performance and refresh concerns and general query issues.